Bitcoin Atlantis is just around the corner. This conference, happening here in Madeira, is something unusual for us locals. The common pattern is that we have to fly to attend such conferences.

I plan to attend the event, and I will be there with an open mindset, since there are always new things to learn. I’m particularly interested in the new technologies and advancements powering the existing ecosystem.

So this post is an early preparation for the conference. I noticed that the community is increasingly gathering around a protocol called Nostr. I also noticed that many cool new applications are being built on top of the Lightning Network.

To blend in, to actively participate and to make the most of my time there, I just set up my Nostr presence with a lightning address pointing to a Lightning Network wallet.

This setup allows me to interact with others using a Twitter/X-like interface. Instead of likes and favorites, people can express their support by sending small bitcoin tips (zaps) directly to the authors by clicking or tapping a button.

Before describing what I did, I will give a brief introduction to Nostr and the Lightning Network, to give the reader some context.

Lightning network

Built on top of the Bitcoin blockchain, the Lightning Network allows users to send and receive payments rapidly (within a couple of seconds) and with minimal fees (less than a cent). These transactions are kept off-chain and are only settled on the blockchain later, when the users decide to make the outcome permanent.

It works very well and led to the development of many websites, apps, and services with use cases that weren’t previously possible or viable. One example is quickly paying a few cents to read an article or paying to watch a stream by the second without any sort of credit.

One of the shortcomings is that for every payment to happen, the receiving party needs to generate and share an invoice first. For certain scenarios, this flow is not ideal.

Recently, a new protocol called LNURL was developed to allow users to send payments without needing a pre-generated invoice. The only thing that is needed is this “URL”, which can work like a traditional address.

On top of this new protocol, users can create Lightning Addresses, which look and work just like e-mail addresses. So, you can send some BTC to joe@example.com, which is much friendlier than a standard bitcoin address.
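To make the "looks like an e-mail address" part concrete, here is a minimal sketch of how a wallet could turn a Lightning Address into the LNURL endpoint it queries. The function name `lightningAddressToUrl` is made up for illustration, and the sketch assumes a regular (clearnet) domain served over HTTPS.

```javascript
// Map a Lightning Address (user@domain) to the LNURL-pay endpoint
// that a wallet requests before sending a payment.
// Assumption: a clearnet domain reachable over HTTPS.
function lightningAddressToUrl(address) {
  const parts = address.split("@");
  if (parts.length !== 2 || parts[0] === "" || parts[1] === "") {
    throw new Error(`Invalid lightning address: ${address}`);
  }
  const [user, domain] = parts;
  return `https://${domain}/.well-known/lnurlp/${user}`;
}

console.log(lightningAddressToUrl("joe@example.com"));
// https://example.com/.well-known/lnurlp/joe
```

The wallet then performs a GET request on that URL and uses the JSON it gets back to request an invoice on demand.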

Nostr

Twitter is centralized, Mastodon is not, but it is federated, which means you still need an account on a given server. Nostr, on the other hand, is an entirely different approach to the social media landscape.

Just like in Bitcoin, users generate a pair of keys: one is the public identifier and the other allows the user to prove their identity.

Instead of having an account where the user stores and publishes their messages, on Nostr the user creates a message, signs it, and publishes it to one or more servers (called relays).

Readers can then look for messages of a given identifier, on one or more servers.
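The publish and lookup steps can be sketched as the JSON messages a client sends to a relay over a WebSocket, following the basic Nostr protocol (NIP-01). This is only a rough sketch: the helper names, the subscription id and the hex key below are placeholders, and signing the event is assumed to have happened elsewhere. Relays filter by the hex form of the public key, while the `npub…` form is a friendlier encoding of the same key.

```javascript
// Wrap an already-signed event so it can be published to a relay:
// ["EVENT", <event>]
function publishMessage(signedEvent) {
  return JSON.stringify(["EVENT", signedEvent]);
}

// Ask a relay for the latest text notes (kind 1) of a single author:
// ["REQ", <subscription id>, <filter>]
function subscribeMessage(subscriptionId, authorHexPubkey, limit = 10) {
  return JSON.stringify([
    "REQ",
    subscriptionId,
    { authors: [authorHexPubkey], kinds: [1], limit },
  ]);
}

// Placeholder 32-byte public key in hex, not a real identity.
const author = "ab".repeat(32);
console.log(subscribeMessage("my-feed", author, 5));
```

A reader would send the same `REQ` message to each relay they want to query and merge the events that come back.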

One of the characteristics of this approach is that it is censorship resistant, and the individual is not at the mercy of the owner of a given service.

The relationship between Nostr and Bitcoin’s Lightning Network, which I described above, is that the Bitcoin community was one of the first to adopt Nostr. Furthermore, many Nostr apps have already integrated support for tipping over the Lightning Network, more specifically using lightning addresses.

My new contacts

With that long introduction done, this post serves as an announcement that I will start sharing my content on Nostr as well.

For now, I will just share the same things that I already do on Mastodon/Fediverse. But as we get closer to the conference, I plan to join a few discussions about Bitcoin and share more material about that specific subject.

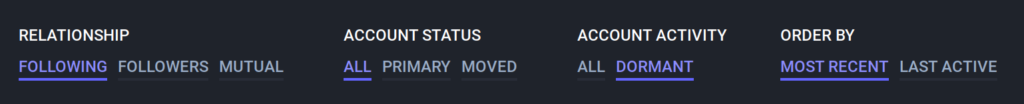

You can follow me using:

npub1c86s34sfthe0yx4dp2sevkz2njm5lqz0arscrkhjqhkdexn5kuqqtlvmv9

View on Primal

To send and receive some tips/zaps, I had to set up a lightning address. Unfortunately, setting one up from scratch in a self-custodial way still requires some effort.

The app that I currently use can provide one, but they take custody of the funds (which in my humble opinion goes a bit against the whole point). This address uses their domain, something like: raremouse1@primal.net. It doesn’t look so good, especially if I want to switch providers in the future or have self-custody of my wallet.

Looking at the Lightning Address specification, I noticed that it is possible to create some kind of “alias” that allows us to easily switch later.

To test, I just quickly wrote a Cloudflare worker that essentially looks like the following:

    // LNURL-pay endpoint of the lightning address provided by Primal.
    const url = 'https://primal.net/.well-known/lnurlp/raremouse1'

    export default {
      async fetch(request, env, ctx) {
        async function MethodNotAllowed(request) {
          return new Response(
            `Method ${request.method} not allowed.`,
            {
              status: 405,
              headers: {
                Allow: "GET",
              },
            });
        }

        // Only GET requests work with this proxy.
        if (request.method !== "GET")
          return MethodNotAllowed(request);

        // Fetch the original LNURL-pay response from the upstream provider.
        let response = await fetch(url);
        let content = await response.json();

        // Replace the upstream address with the alias in the metadata string.
        content.metadata = content.metadata.replace(
          "raremouse1@primal.net",
          "gon@ovalerio.net"
        );

        // Reuse the status and headers of the upstream response.
        return new Response(
          JSON.stringify(content),
          response
        )
      },
    };

Then I just had to let it handle the https://ovalerio.net/.well-known/lnurlp/gon route.
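To show what the rewrite in the worker operates on, here is a small standalone sketch. It is a variant, not the exact code I deployed: instead of a plain string replace, it parses the `metadata` field (a JSON string holding `[type, value]` pairs) and changes only the `text/identifier` entry. The function name `rewriteIdentifier` and every value in the sample response are made up for illustration.

```javascript
// Return a copy of an LNURL-pay response whose "text/identifier"
// metadata entry points to the alias instead of the upstream address.
function rewriteIdentifier(payResponse, alias) {
  // `metadata` is itself a JSON string, so it has to be parsed first.
  const entries = JSON.parse(payResponse.metadata);
  const rewritten = entries.map((entry) =>
    entry[0] === "text/identifier" ? ["text/identifier", alias] : entry
  );
  return { ...payResponse, metadata: JSON.stringify(rewritten) };
}

// Hypothetical upstream response; all values are placeholders.
const upstream = {
  tag: "payRequest",
  callback: "https://provider.example/lnurlp/callback/raremouse1",
  minSendable: 1000,
  maxSendable: 100000000,
  metadata: JSON.stringify([
    ["text/plain", "Payment to raremouse1"],
    ["text/identifier", "raremouse1@primal.net"],
  ]),
};

const aliased = rewriteIdentifier(upstream, "gon@ovalerio.net");
console.log(aliased.metadata);
```

Everything else, including the `callback` that produces the invoice, still points to the upstream provider. One thing to keep in mind is that a wallet may compare the invoice's description hash with the metadata it received, so whether a rewritten metadata is accepted can depend on the wallet.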

The wallet is still the one provided by Primal. However, I can now share with everyone that my lightning address is the same as my email: gon at ovalerio dot net. When I move to my own infrastructure, the address will remain the same.

And that's it. Feel free to use the above script to set up your own lightning address, and to follow me on Nostr. If any of this is useful to you, you can buy me a beer by sending a few sats to my new lightning address.