I’ve been participating in the Fediverse through my own Mastodon instance since 2017.

What started as an experiment in exploring decentralized and federated alternatives for communicating over the internet stuck. At the end of 2024, I’m still there.

The common advice on this network is to find an instance with a community you like and start from there. At the time, I thought that having full control over what I publish was a more interesting approach.

Nowadays, there are multiple alternative software implementations you can use to join the network (this blog is a recent example), some of them better suited to particular use cases. At the time, the obvious choice was Mastodon in single-user mode, but oh boy, it is heavy.

Just to give you a glimpse, the container image surpasses 1 GB in size, and you must run at least 3 of those, plus a PostgreSQL database, a Redis broker and, optionally, Elasticsearch.

For a multi-user instance, this might make total sense, but for a low-traffic, single-user service, it is too much overhead and can get expensive.

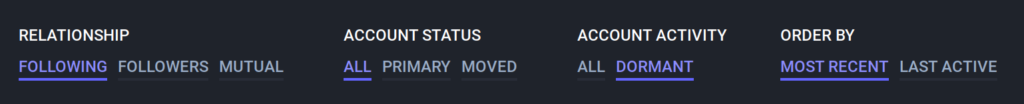

A more lightweight implementation would fit my needs much better, but just thinking about the migration process gives me cold feet. I’m also very used to the apps I ended up using to interact with my instance, which might be specific to Mastodon’s API.

So, I decided to go in a different direction and look for configuration options that would reduce the weight of the software on my small machine; in other words, run Mastodon on the smallest machine possible.

My config

To achieve this goal, I took a closer look at the available options. These are the settings I ended up changing over time that produced some improvements:

- `ES_ENABLED=false`: I don’t need advanced search capabilities, so I don’t need to run this extra piece of software. This was a decision I made on day 1.
- `STREAMING_CLUSTER_NUM=1`: This is an old setting that controls the number of processes that handle WebSocket connections and the events sent to the frontend. For 1 user, we don’t need more than one. In recent versions, this setting was removed, and the value is always one.
- `SIDEKIQ_CONCURRENCY=1`: Processing background tasks in a timely fashion is fundamental to how Mastodon works, but for a single-user instance, 1 or 2 workers should be more than enough. The default value is 5; I used 2 for years, but 1 should be enough.
- `WEB_CONCURRENCY=1`: Dealing with a low volume of requests doesn’t require many workers, but having at least some concurrency is important. We can achieve that with threads, so we can keep the number of processes at 1.
- `MAX_THREADS=4`: The default is 5. I reduced it to 4, and perhaps I could go even further, but I don’t think I would see any significant gains.
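Put together, the settings above could look like the following fragment of `.env.production`, the environment file a standard (non-container) Mastodon install reads. The file name is an assumption about your setup; a container deployment may pass the same variables through its own environment configuration. Since Mastodon’s web service runs on Puma, the number of requests it can serve at once is roughly `WEB_CONCURRENCY × MAX_THREADS`, so this sketch allows about 1 × 4 = 4 concurrent requests from a single process.

```shell
# Fragment of .env.production for a single-user instance
# (assumed file name; adapt to how your setup passes environment variables).

# Don't run Elasticsearch; full-text search is not needed
ES_ENABLED=false

# Streaming server processes; only read by older Mastodon versions
STREAMING_CLUSTER_NUM=1

# Sidekiq concurrency for background jobs (default: 5)
SIDEKIQ_CONCURRENCY=1

# Puma worker processes for the web service
WEB_CONCURRENCY=1

# Threads per Puma process (default: 5); about 1 x 4 = 4 requests in parallel
MAX_THREADS=4
```

Keeping a single process and relying on threads avoids paying the memory cost of a second full Rails process, which is where most of the savings come from.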

To save some disk space, I also periodically remove old content from users that live on other servers. I do that in two ways:

- Changed the media cache retention period to 2 days and the user archive retention period to 7 days, in Administration > Server Settings > Content Retention.
- Periodically run the `tootctl media remove` and `tootctl preview_cards remove` commands.
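The periodic cleanup can be automated, for example with a small script called from cron. This is a sketch under several assumptions: it runs from the Mastodon source directory with `bin/tootctl` available, the `TOOTCTL` variable and `prune_remote_content` function are hypothetical names, and the `--days` values are illustrative choices rather than recommendations. In a Docker Compose setup, `TOOTCTL` could instead be set to something like `docker compose run --rm web bin/tootctl`.

```shell
#!/bin/sh
# Sketch: prune cached remote content with tootctl.
# Assumptions: run from the Mastodon directory (e.g. /home/mastodon/live);
# the retention values below are illustrative.

# Hypothetical override for other layouts, e.g.
#   TOOTCTL="docker compose run --rm web bin/tootctl"
TOOTCTL="${TOOTCTL:-bin/tootctl}"
export RAILS_ENV="${RAILS_ENV:-production}"

prune_remote_content() {
  # $TOOTCTL is intentionally unquoted so a multi-word wrapper splits into words.
  # Remove cached media attachments of remote posts older than 2 days
  $TOOTCTL media remove --days 2
  # Remove cached preview card thumbnails older than 30 days (tootctl's default is 180)
  $TOOTCTL preview_cards remove --days 30
}

prune_remote_content
```

A crontab entry along the lines of `0 4 * * * cd /home/mastodon/live && ./prune.sh` would run it once a day; the path, file name and schedule are placeholders.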

Result

In the end, I was able to reduce the resources used by my instance and avoid many of the alerts my monitoring tools were throwing all the time. However, I wasn’t able to downsize my machine and reduce my costs.

It still requires at least 2 GB of RAM to run well, although with these changes there’s much more breathing room.

If there is a lesson to be learned or a recommendation to be made from this post, it is that if you want to participate in the Fediverse while having complete control, you should opt for a lighter implementation.

Do you know any other quick tips that I could try to optimize my instance further? Let me know.